Kubernetes Deployment Strategies: Blue/Green, Canary, and Rolling Updates with YAML Code

Kubernetes Deployment Strategies: A Complete Guide

================================================

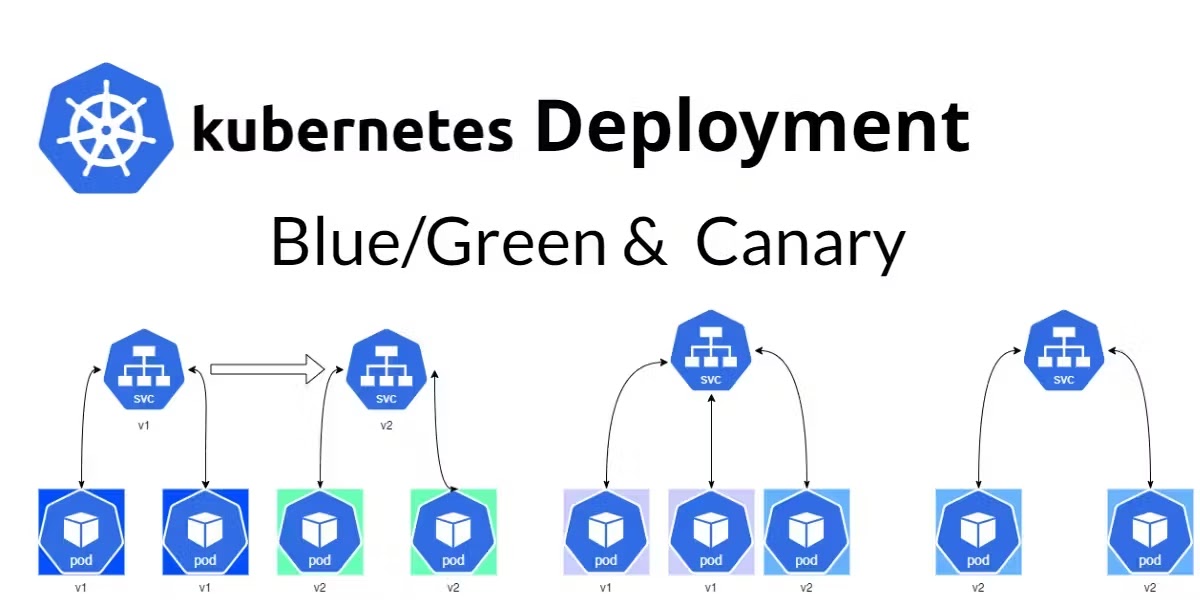

Kubernetes has revolutionized application deployment, making it easier to manage, scale, and roll out new versions. However, choosing the right deployment strategy is crucial to minimize downtime and ensure a smooth transition between application versions. In this blog, we will explore the most common Kubernetes deployment strategies: Blue-Green Deployment, Canary Deployment, and Rolling Updates.

================================================

1. Blue-Green Deployment

What is Blue-Green Deployment?

Blue-Green Deployment is a strategy that involves maintaining two separate environments, Blue (current version) and Green (new version). The idea is to deploy a new version in the Green environment while keeping the Blue environment live.

How It Works

- The Blue environment serves live traffic.

- A new version is deployed in the Green environment and tested.

- Traffic is switched from Blue to Green when the new version is stable.

- The Blue environment is kept as a backup in case a rollback is needed.

Pros:

✅ Zero downtime during deployment.

✅ Easy rollback by switching traffic back to Blue.

✅ Lets you test the new version in a production-like environment before it receives live traffic.

Cons:

❌ Requires double the infrastructure, increasing costs.

❌ Database migrations must be carefully handled to avoid conflicts.

=============================================================

Implementation in Kubernetes

    apiVersion: v1
    kind: Service
    metadata:
      name: my-app-service
    spec:
      selector:
        app: my-app-green  # Switch between my-app-blue and my-app-green
      ports:
        - protocol: TCP
          port: 80
          targetPort: 8080

Full Blue-Green Deployment Example

1. Deploy the Blue Environment

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-app-blue
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: my-app-blue
      template:
        metadata:
          labels:
            app: my-app-blue
        spec:
          containers:
            - name: my-app
              image: my-app:v1
              ports:
                - containerPort: 8080

2. Deploy the Green Environment

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-app-green
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: my-app-green
      template:
        metadata:
          labels:
            app: my-app-green
        spec:
          containers:
            - name: my-app
              image: my-app:v2  # New version
              ports:
                - containerPort: 8080
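Green has to be reachable for testing before it takes live traffic. One possible approach, sketched here as an assumption rather than a required part of the pattern, is a second internal Service that selects only the Green pods. The name my-app-green-preview is hypothetical.

```yaml
# Hypothetical preview Service: lets you test Green inside the cluster
# while my-app-service keeps sending live traffic to Blue.
apiVersion: v1
kind: Service
metadata:
  name: my-app-green-preview  # assumed name, not part of the original example
spec:
  selector:
    app: my-app-green         # matches the labels of the Green Deployment
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
```

With no type set, the Service defaults to ClusterIP, so it is only reachable from inside the cluster.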

3. Load Balancing with a Service

    apiVersion: v1
    kind: Service
    metadata:
      name: my-app-service
    spec:
      selector:
        app: my-app-blue  # Initially pointing to blue
      ports:
        - protocol: TCP
          port: 80
          targetPort: 8080

4. Switch Traffic to Green

Modify the Service selector to point to my-app-green:

    apiVersion: v1
    kind: Service
    metadata:
      name: my-app-service
    spec:
      selector:
        app: my-app-green  # Now pointing to green
      ports:
        - protocol: TCP
          port: 80
          targetPort: 8080

This strategy switches traffic between the two environments with a single selector change. New connections go to Green as soon as the Service's endpoints are updated, and switching back to Blue works the same way.
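Rolling back is the same selector change in reverse. As a minimal sketch, assuming you keep the change in a small patch file (the file name rollback-to-blue.yaml is hypothetical), it could look like this:

```yaml
# rollback-to-blue.yaml (hypothetical file name)
# Patch that points my-app-service back at the Blue pods.
spec:
  selector:
    app: my-app-blue
```

It could then be applied with `kubectl patch service my-app-service --patch-file rollback-to-blue.yaml`. Re-applying the original Service manifest with `kubectl apply` would have the same effect.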

================================================

2. Canary Deployment

What is Canary Deployment?

Canary Deployment is a progressive rollout strategy where a small percentage of users are exposed to the new version before a full deployment.

How It Works

- Deploy a small number of pods running the new version.

- Gradually increase traffic to the new version while monitoring performance.

- If the new version is stable, increase traffic until full rollout.

- If issues arise, rollback is easier due to limited exposure.
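For a rough split without any extra tooling, the steps above can be approximated with replica counts: the stable and canary Deployments share one label, and a single Service selects both. The sketch below assumes a stable Deployment (not shown) that is identical except for `track: stable`, `image: my-app:v1`, and `replicas: 9`. The `track` label and the name my-app-canary are illustrative choices.

```yaml
# Canary Deployment: 1 replica next to an assumed 9 stable replicas,
# so roughly 1 in 10 connections reaches the new version.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-canary        # hypothetical name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-app
      track: canary
  template:
    metadata:
      labels:
        app: my-app          # shared with the stable pods
        track: canary        # distinguishes canary from stable
    spec:
      containers:
        - name: my-app
          image: my-app:v2
          ports:
            - containerPort: 8080
---
# The Service selects only the shared label, so it load-balances
# across stable and canary pods alike.
apiVersion: v1
kind: Service
metadata:
  name: my-app-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
```

The split is only approximate and is tied to pod counts, which is why precise percentages usually call for a traffic-splitting layer.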

Pros:

✅ Minimizes risk by exposing only a fraction of users.

✅ Allows real-time monitoring of performance and stability.

✅ Easier rollback if problems occur.

Cons:

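❌ Precise, percentage-based traffic splitting requires an extra component, such as a service mesh (e.g. Istio) or an ingress controller with canary support (e.g. NGINX Ingress).

❌ Adds complexity: you need metrics and clear criteria to decide when to promote or roll back.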

Implementation in Kubernetes

Using Istio VirtualService:

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: my-app-vs
    spec:
      hosts:
        - my-app.example.com
      http:
        - route:
            - destination:
                host: my-app-service
                subset: stable
              weight: 90  # 90% traffic to stable
            - destination:
                host: my-app-service
                subset: canary
              weight: 10  # 10% traffic to new version
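The stable and canary subsets referenced by the VirtualService have to be defined in an Istio DestinationRule, which maps each subset name to pod labels. A minimal sketch follows, assuming the pods carry a `version` label with the values v1 and v2. Neither the label nor the resource name my-app-destination comes from the example above.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: my-app-destination   # hypothetical name
spec:
  host: my-app-service       # same host the VirtualService routes to
  subsets:
    - name: stable
      labels:
        version: v1          # assumed label on the current pods
    - name: canary
      labels:
        version: v2          # assumed label on the new pods
```

To shift more traffic to the canary, you would adjust only the two weight values in the VirtualService. They must add up to 100.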

================================================

3. Rolling Update

What is Rolling Update?

Rolling Update is the default Kubernetes deployment strategy where the old version is gradually replaced with the new version without downtime.

How It Works

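- Kubernetes replaces pods in small batches with the new version; the batch size is controlled by maxSurge and maxUnavailable.

- The number of available replicas is kept above a minimum to prevent downtime. For example, with replicas: 3, maxUnavailable: 1, and maxSurge: 1, at least 2 pods stay available and at most 4 pods exist at any time.

- If new pods fail to become ready, the rollout stalls instead of replacing the remaining old pods. Kubernetes does not roll back automatically.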
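The last point only holds if Kubernetes can tell whether a new pod is healthy, and that is what a readiness probe is for. The sketch below adds one, together with two optional Deployment fields. The /healthz path and all timing values are assumptions for illustration, not recommendations.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3
  minReadySeconds: 10           # a pod must stay ready this long to count as available
  progressDeadlineSeconds: 120  # report the rollout as failed to progress after 2 minutes
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app-container
          image: my-app:v2
          ports:
            - containerPort: 8080
          readinessProbe:
            httpGet:
              path: /healthz    # hypothetical health endpoint
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 5
            failureThreshold: 3
```

Pods that fail the probe receive no traffic from Services and do not count as available, so the old pods keep serving while the rollout is stuck.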

Pros:

✅ No downtime, as the old version is gradually replaced.

✅ No need for extra infrastructure.

✅ Simple to configure using Kubernetes Deployments.

Cons:

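❌ If issues arise, rollback is slower than Blue-Green, because a rollback (for example with kubectl rollout undo) is itself another rolling update.

❌ Capacity is reduced during the update, and requests can fail if pods receive traffic before they are ready to serve (for example, when no readiness probe is configured).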

Implementation in Kubernetes

Using Deployment strategy:

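    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-app
    spec:
      replicas: 3
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1  # At most 1 pod below the desired count during the update
          maxSurge: 1        # At most 1 extra pod above the desired count
      selector:              # Required for apps/v1 Deployments
        matchLabels:
          app: my-app
      template:
        metadata:
          labels:
            app: my-app      # Must match the selector above
        spec:
          containers:
            - name: my-app-container
              image: my-app:v2  # New version

===============================================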

Choosing the Right Strategy

| Strategy | Zero Downtime | Rollback Speed | Complexity | Cost |
|---|---|---|---|---|
| Blue-Green | ✅ Yes | ✅ Fast | ❌ High | ❌ High |
| Canary | ✅ Yes | ✅ Fast | ❌ Medium | ✅ Low |
| Rolling Update | ✅ Yes | ❌ Slow | ✅ Low | ✅ Low |

When to Use Which Strategy?

- Use Blue-Green when you need instant rollback and have the budget for duplicate infrastructure.

- Use Canary when you want gradual rollout with real-time monitoring.

- Use Rolling Update for simple deployments with minimal risk.

===============================================

Conclusion

Kubernetes provides multiple deployment strategies to ensure smooth application updates while minimizing downtime and risk. The right choice depends on your application’s complexity, traffic sensitivity, and rollback requirements. Whether you choose Blue-Green, Canary, or Rolling Update, understanding their trade-offs will help you make informed decisions.

Which deployment strategy are you using in your Kubernetes environment? Let me know in the comments! 🚀
